Understanding the cost of the cloud, and of every subset of that cloud consumption, is an ever-increasing requirement for DevOps leaders. In fact, FinOps has become the keyword of choice as organizations look to unite their finance and engineering teams under agreed metrics.

Kubernetes falls within that "subset" remit, and Kubernetes cost monitoring is hard. Workloads in an ever-changing environment require a lot of configuration, and, unfortunately, few teams do Kubernetes cost monitoring well as a result. It's also hard to understand what resources an application is actually consuming, and whether it's the application itself or the way it was configured that is overconsuming cloud resources.

Why measure costs in Kubernetes?

For organizations that have adopted containers and Kubernetes, cost monitoring quickly becomes a black hole for platform engineering leaders and the finance team. The biggest questions around spend are:

- Cost allocation: How much am I spending? How should that cost be split between teams, applications, and business units?

- Service ownership: Are my teams enabled to monitor cost for their apps and make changes to lower costs?

- Right-sizing resources: Are my apps over-provisioned and using too much compute or memory?

Allocating, optimizing, and managing Kubernetes spend all start with understanding, and having visibility into, the associated costs.

The Challenges of Kubernetes Cost Monitoring

For many teams, the challenges of Kubernetes cost monitoring start with how workloads are configured:

- Did a developer set the right CPU and memory?

- What application is costing the most and why?

- Can you break down your cloud cost by Kubernetes, and then into progressively finer detail?

As a fast-growing startup, we needed a way to better understand the cost of our microservices, especially as customers began adopting various features of Fairwinds Insights. Step one wasn’t actually measuring cost; instead, we developed our open source tool, Goldilocks, to ensure our workloads were configured with the right CPU and memory allocations. This step was critical in ensuring we weren’t over- or under-provisioning resources for our most-used microservices.
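To make the right-sizing idea concrete, here is a minimal sketch of one simple heuristic: take a high percentile of observed CPU usage, add some headroom, and compare the result to the configured request. This is not the Goldilocks algorithm (as we understand it, Goldilocks draws on Vertical Pod Autoscaler recommendations); the `Container` type, the 90th-percentile target, the 15% headroom, and the 25% tolerance are all hypothetical choices for illustration.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class Container:
    name: str
    cpu_request_m: int        # configured CPU request, in millicores
    cpu_usage_m: list[float]  # observed CPU usage samples, in millicores (hypothetical input)


def recommend_cpu_request(samples: list[float], percentile: int = 90, headroom: float = 1.15) -> int:
    """Suggest a CPU request: the given usage percentile plus a safety margin."""
    # quantiles(n=100) returns 99 cut points; cut point k-1 is the k-th percentile.
    cuts = quantiles(samples, n=100, method="inclusive")
    return round(cuts[percentile - 1] * headroom)


def classify(container: Container, tolerance: float = 0.25) -> tuple[str, int]:
    """Compare the configured request against the usage-based recommendation."""
    rec = recommend_cpu_request(container.cpu_usage_m)
    req = container.cpu_request_m
    if req > rec * (1 + tolerance):
        return "over-provisioned", rec
    if req < rec * (1 - tolerance):
        return "under-provisioned", rec
    return "ok", rec


if __name__ == "__main__":
    # Hypothetical usage samples; in practice these would come from a metrics store.
    usage = [80.0, 95.0, 100.0, 110.0, 120.0, 90.0, 105.0, 98.0]
    for c in [Container("api", 500, usage), Container("worker", 120, usage)]:
        status, rec = classify(c)
        print(f"{c.name}: request={c.cpu_request_m}m recommended={rec}m -> {status}")
```

A percentile with headroom tolerates short spikes without sizing for the single worst sample; memory usually deserves a more conservative target, since exceeding a memory limit gets a container killed rather than throttled.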

Once we had our requests and limits set, we found that our traditional cloud cost tools did not break costs down by Kubernetes workload, namespace, or label. Without that breakdown, it was difficult to understand which microservices or features were driving the most resource utilization in the cluster, and whether those features were profitable for us.

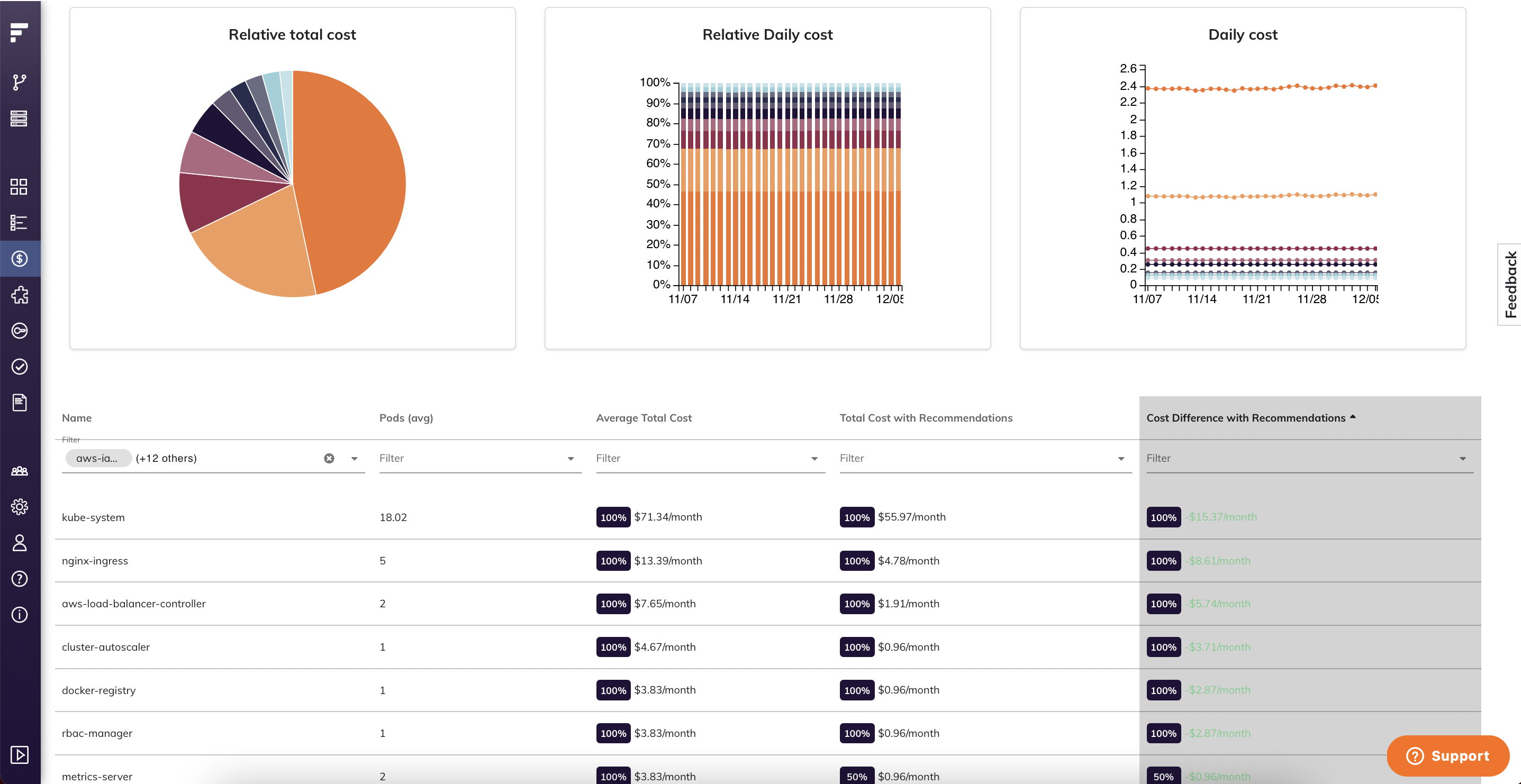

In our customer and community conversations, we realized we weren’t alone. We developed a Kubernetes Cost Allocation feature within Fairwinds Insights, allowing us to configure a blended price per hour for the nodes powering our cluster. With that information, and tracking actual pod and resource utilization using Prometheus metrics, we were able to generate a relative cost of our workloads. This move helped us track our cloud spend, while also determining which features were impacting cost.
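The general approach of pricing nodes and attributing that cost to workloads by resource share can be sketched as follows. This is a simplified illustration under our own assumptions, not the Insights implementation: the `NodePool` and `Workload` types are hypothetical, the 50/50 split of cost between CPU and memory is an arbitrary choice, and per-workload usage is hard-coded here rather than derived from Prometheus metrics such as cAdvisor's `container_cpu_usage_seconds_total`.

```python
from dataclasses import dataclass


@dataclass
class NodePool:
    hourly_price: float  # price per node-hour (assumed input, e.g. from a price sheet)
    count: int           # number of nodes in the pool
    cpu_cores: float     # allocatable CPU per node
    memory_gib: float    # allocatable memory per node


@dataclass
class Workload:
    name: str
    cpu_cores: float   # CPU attributed to the workload over the hour (hypothetical input)
    memory_gib: float  # memory attributed to the workload over the hour (hypothetical input)


def blended_node_price(pools: list[NodePool]) -> float:
    """Average price per node-hour across all pools, weighted by node count."""
    total_cost = sum(p.hourly_price * p.count for p in pools)
    total_nodes = sum(p.count for p in pools)
    return total_cost / total_nodes


def allocate_hourly_cost(pools: list[NodePool], workloads: list[Workload],
                         cpu_weight: float = 0.5) -> dict[str, float]:
    """Split the cluster's hourly cost across workloads by CPU and memory share."""
    total_cost = sum(p.hourly_price * p.count for p in pools)
    total_cpu = sum(p.cpu_cores * p.count for p in pools)
    total_mem = sum(p.memory_gib * p.count for p in pools)
    # Assumption: a fixed fraction of cost is attributed to CPU, the rest to memory.
    per_core = total_cost * cpu_weight / total_cpu
    per_gib = total_cost * (1 - cpu_weight) / total_mem
    return {w.name: w.cpu_cores * per_core + w.memory_gib * per_gib for w in workloads}


if __name__ == "__main__":
    pools = [NodePool(0.40, 2, 4, 16), NodePool(0.80, 1, 8, 32)]
    workloads = [Workload("api", 2, 8), Workload("worker", 4, 4)]
    print(f"blended price per node-hour: {blended_node_price(pools):.4f}")
    for name, cost in allocate_hourly_cost(pools, workloads).items():
        print(f"{name}: ${cost:.3f}/hour")
```

Because costs are priced per unit of cluster capacity, idle capacity stays unallocated; the resulting figures are relative costs, which is why they are useful for comparing workloads even before they are reconciled against an actual bill.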

Platform engineering teams, especially those that have embraced Kubernetes service ownership, are also asked about the cost of individual teams and products. These requests ultimately come from finance leaders who need visibility into the marginal cost of running different products. Service owners can also use this information to make their own decisions about resource utilization, and to find ways to save resources and cost along the way.

Monitor and Manage All Your Kubernetes Costs in One Place

Accurate Kubernetes cost allocation becomes increasingly complex when Kubernetes is used as a multi-tenant, shared computing environment. Different teams may use different node types, and workloads are constantly changing, making the price per hour of a node a moving target.

We’re excited to announce that Fairwinds Insights now supports AWS billing integration, allowing teams to use their real cloud bill for calculating costs by workload, namespace or label. With labels, platform engineering teams can “slice-and-dice” accurate workload costs by multiple business dimensions, satisfying the needs of service owners and finance without questioning the underlying node pricing assumptions.
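As a rough illustration of "slice-and-dice" by labels, the sketch below takes per-workload cost estimates, scales them so they sum to an actual billed amount, and then totals them by any label key, such as `team` or `product`. It is a hypothetical example, not how Fairwinds Insights consumes AWS billing data: the function names, the label keys, and the choice to scale every workload proportionally (which spreads idle and shared cost evenly) are all our own assumptions.

```python
from collections import defaultdict


def reconcile_to_bill(estimates: dict[str, float], billed_total: float) -> dict[str, float]:
    """Scale relative cost estimates so they sum to the billed amount (proportional split)."""
    estimated_total = sum(estimates.values())
    if estimated_total == 0:
        raise ValueError("no estimated cost to scale")
    factor = billed_total / estimated_total
    return {name: cost * factor for name, cost in estimates.items()}


def cost_by_label(costs: dict[str, float], labels: dict[str, dict[str, str]],
                  label_key: str, missing: str = "(unlabeled)") -> dict[str, float]:
    """Sum per-workload costs by the value of one label key."""
    totals: dict[str, float] = defaultdict(float)
    for name, cost in costs.items():
        # Workloads without the label are grouped under a placeholder bucket.
        value = labels.get(name, {}).get(label_key, missing)
        totals[value] += cost
    return dict(totals)


if __name__ == "__main__":
    estimates = {"api": 0.20, "worker": 0.25, "cron": 0.05}  # hypothetical relative costs
    labels = {
        "api": {"team": "payments", "product": "checkout"},
        "worker": {"team": "payments", "product": "billing"},
        "cron": {"team": "platform"},
    }
    billed = reconcile_to_bill(estimates, billed_total=1.00)
    print(cost_by_label(billed, labels, "team"))
    print(cost_by_label(billed, labels, "product"))
```

Grouping by different label keys over the same reconciled figures lets finance and service owners look at one cost total from several business dimensions without re-pricing any nodes.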

Interested in using Fairwinds Insights? It’s available for free! Learn more here.